Multimodal Deep Learning and Vision-Language Models

In this new project, our PhD student Robin Hollifeldt and postdoc Tianru Zhang will conduct fundamental machine learning research and develop principled foundations for vision-language models. We will study how combining language with images and videos can lead to better representations of the events around us, making AI models more capable and intelligent.

The project is part of the Beijer Laboratory for Artificial Intelligence Research, funded by the Kjell och Märta Beijer Foundation.

Supervisors (PhD student): Asst. Prof. Ekta Vats and Prof. Thomas Schön.

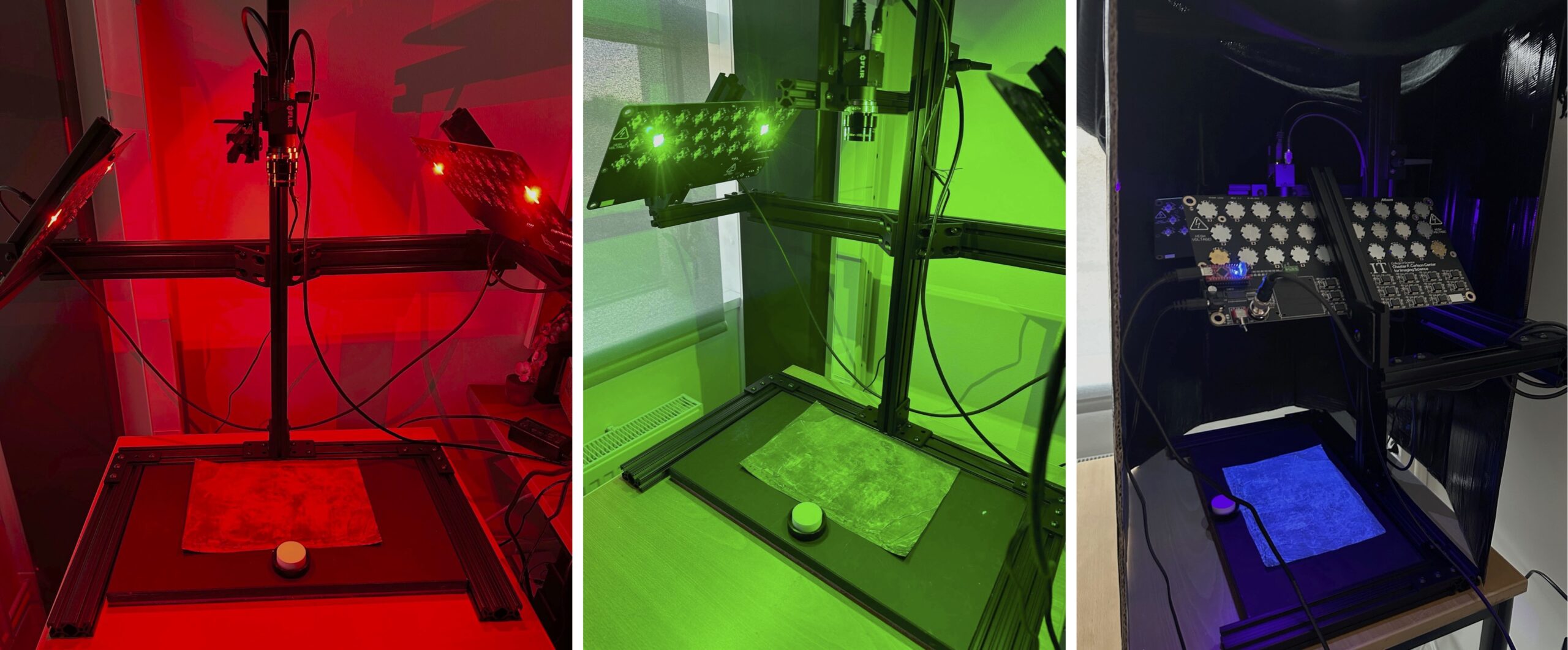

Multispectral Imaging

In collaboration with team MISHA at the Rochester Institute of Technology, Ekta Vats and her group have built a cost-effective multispectral imaging (MSI) system in the lab that will enable research studies on degraded manuscripts. This pre-study was partially supported by the Kjell och Märta Beijer Foundation, and we extend our heartfelt gratitude to Prof. Thomas Schön for his encouragement and support. Together with the National Library of Sweden, we aim to take a potential leading role in MSI-based digitisation of, and research on, historical manuscripts in Sweden.